Although AI holds immense promise for enterprises, getting AI models into production remains a significant challenge. Many AI projects never progress beyond the pilot stage to deliver real business value, largely because of the limitations of traditional AI frameworks.

Table of Contents:

- Introduction

- Traditional AI stacks are siloed, slow, and not built for modern AI workloads

- What is Snowflake Openflow?

- Enterprise AI Challenges: What’s Holding Your Business Back?

- Snowflake Openflow’s Integration Power

- How does Snowflake Openflow offer a competitive advantage to enterprises?

- How to Set Up Snowflake Openflow

- Conclusion: Snowflake Openflow – The True Unification of Data & AI

Here’s the real question: why do the majority of enterprise AI models never reach production?

Several factors contribute to this gap:

-

Insufficient AI-ready data: Data is the foundation of AI model development, but it is often in the wrong format, incomplete, or outdated, which hinders development.

-

Complexity in deployment: Moving an AI model from a development environment into a fully managed production environment brings integration and operational challenges that are often overlooked when the model is first conceived.

-

Absent governance and scaling roadmaps: Some organizations lack a strategy for governing, monitoring, and scaling AI models once they are deployed. Without one, projects stall before they ever reach production.

-

No alignment with business objectives: AI projects often fail to reach production when they are not aligned with business objectives or when expectations of the model are unrealistic.

-

Lack of proper skills: Insufficient AI expertise can also keep models from reaching production, and a lack of training and tools makes it harder for employees to adapt to evolving AI frameworks.
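Data readiness, the first factor above, can be checked before any model work begins. The Python sketch below is a hypothetical illustration, not part of Openflow or any Snowflake API: it measures completeness and freshness for a list of records, and the field names and the 95% completeness threshold are assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone


def readiness_report(rows, required_fields, timestamp_field, max_age,
                     min_completeness=0.95, now=None):
    """Summarize completeness and freshness for a list of dict records.

    Field names and the default threshold are illustrative assumptions.
    """
    now = now or datetime.now(timezone.utc)
    total = len(rows)
    if total == 0:
        return {"rows": 0, "completeness": 0.0, "stale_rows": 0, "ready": False}

    # A row is complete when every required field is present and non-empty.
    complete = sum(
        1 for r in rows
        if all(r.get(f) not in (None, "") for f in required_fields)
    )
    # A row is stale when its timestamp is missing or older than max_age.
    stale = sum(
        1 for r in rows
        if r.get(timestamp_field) is None or now - r[timestamp_field] > max_age
    )
    completeness = complete / total
    return {
        "rows": total,
        "completeness": completeness,
        "stale_rows": stale,
        "ready": completeness >= min_completeness and stale == 0,
    }


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    rows = [
        {"id": 1, "text": "refund request", "updated_at": now - timedelta(hours=1)},
        {"id": 2, "text": "", "updated_at": now - timedelta(days=40)},
    ]
    print(readiness_report(rows, ["id", "text"], "updated_at", timedelta(days=30), now=now))
```

A report like this gives teams a concrete gate ("ready" or not) instead of discovering data gaps after a model underperforms.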

Traditional AI stacks are siloed, slow, and not built for modern AI workloads

Traditional data frameworks often struggle to keep up with the demands of modern AI systems. They were designed primarily for structured data and batch processing, so they cannot easily handle the variety of data types and the real-time ingestion that AI workloads require. Scattered data pipelines also demand significant engineering effort to build and maintain, which creates further hurdles for AI deployments.

Introducing Snowflake Openflow and How It Redefines Enterprise AI

Snowflake Openflow is designed to work with several types of data: structured, unstructured, and streaming. It can also move data from databases such as MySQL, SQL Server, and PostgreSQL, as well as from SaaS applications such as Slack, Workday, and HubSpot.

Put simply, Snowflake Openflow addresses these challenges by offering a single, unified, and scalable data integration service. Built on the Apache NiFi framework, Openflow streamlines and accelerates data movement, making it easier to prepare data for AI workloads.

Here’s how Openflow redefines the way enterprises build and scale AI:

-

Openflow offers a single platform for connecting to virtually any application or database, with support for structured, unstructured, and streaming data.

-

Openflow integrates seamlessly with the existing Snowflake ecosystem, including its security, deployment, and governance controls.

-

Openflow manages the complete data journey, from ingestion to analysis, so that data is ready for ML and AI models. Users can also build custom connectors and flows as AI requirements evolve.

-

Openflow supports deploying pipelines within the customer’s VPC (BYOC) or on Snowflake’s infrastructure, offering flexibility and control.

-

Snowflake Openflow supports continuous ingestion of multimodal data for AI, including pre-processing and parsing of unstructured data with Snowflake Cortex AI.

By addressing the data integration challenges at the core of AI projects, Snowflake Openflow empowers enterprises to move beyond experimentation and successfully deploy AI models into production, driving real business value and accelerating AI innovation.

What is Snowflake Openflow?

Snowflake Openflow is a data integration service built by Snowflake on the Apache NiFi framework. Openflow was unveiled at Snowflake Summit in June 2025.

Openflow provides a scalable, secure, and versatile platform for moving data from sources to destinations across the Snowflake Data Cloud.

Core Functionality of Snowflake Openflow

-

Unified data integration: Openflow brings data from multiple sources into a single platform, whether it arrives in batches or as a stream and whether it is structured or unstructured.

-

Data extraction & loading: Openflow extracts data from source systems and loads it efficiently into Snowflake.

-

Data transformation: Openflow provides a library of processors that let users modify, filter, and enrich data within the pipeline.

-

Data movement & routing: Openflow simplifies managing how data flows and where it is routed, and supports bidirectional movement between sources and destinations.
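To make the extract, transform, and route steps above more concrete, here is a conceptual Python sketch of a processor-based pipeline. It only mimics the idea behind Apache NiFi, on which Openflow is built, where each record (a FlowFile) carries content plus attributes and passes through a chain of processors. It does not use the NiFi or Openflow APIs, and the processor names, the `source` attribute, and the destination names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class FlowRecord:
    """Simplified stand-in for a NiFi FlowFile: a payload plus string attributes."""
    content: dict
    attributes: Dict[str, str] = field(default_factory=dict)


# A processor returns a (possibly modified) record, or None to filter it out.
Processor = Callable[[FlowRecord], Optional[FlowRecord]]


def drop_empty(rec: FlowRecord) -> Optional[FlowRecord]:
    """Filter: discard records whose body is missing or empty."""
    return rec if rec.content.get("body") else None


def add_length(rec: FlowRecord) -> FlowRecord:
    """Enrich: store the body length as a string attribute."""
    rec.attributes["body.length"] = str(len(rec.content["body"]))
    return rec


def route_by_source(rec: FlowRecord) -> str:
    """Route: pick a hypothetical destination from the 'source' attribute."""
    return "crm_tickets" if rec.attributes.get("source") == "crm" else "raw_events"


def run_pipeline(records: List[FlowRecord], processors: List[Processor],
                 route: Callable[[FlowRecord], str]) -> Dict[str, List[FlowRecord]]:
    """Apply processors in order, then group surviving records by destination."""
    destinations: Dict[str, List[FlowRecord]] = {}
    for rec in records:
        current: Optional[FlowRecord] = rec
        for proc in processors:
            current = proc(current)
            if current is None:  # filtered out; skip remaining processors
                break
        if current is not None:
            destinations.setdefault(route(current), []).append(current)
    return destinations


if __name__ == "__main__":
    batch = [
        FlowRecord({"body": "hello"}, {"source": "crm"}),
        FlowRecord({"body": ""}, {"source": "crm"}),
        FlowRecord({"body": "hi there"}, {"source": "app"}),
    ]
    out = run_pipeline(batch, [drop_empty, add_length], route_by_source)
    print({dest: len(recs) for dest, recs in out.items()})
```

In Openflow itself, these steps are configured as processors in a flow rather than written as Python functions; the sketch only shows the data-flow model.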

Key Features of Snowflake Openflow

-

Open & extensible: Because it is built on Apache NiFi, Openflow lets users create and extend processors to connect with different data sources and destinations.

-

Hybrid deployment options: Openflow supports either the BYOC (Bring Your Own Cloud) deployment model or a fully managed deployment on Snowflake’s infrastructure.

-

Continuous multimodal data ingestion: Supports near real-time ingestion of various data types, including unstructured data, to prepare data for AI processing and for applications such as "chat with your data" experiences built with Snowflake Cortex AI.

-

Enterprise-grade security and governance: Integrates with Snowflake’s security model, offering fine-grained, role-based access control and supporting data compliance.

-

Unified user interface: Provides a web-based interface, integrated with Snowflake’s Snowsight UI, for designing, managing, and monitoring data pipelines.

-

Extensive connector catalog: Offers a wide range of pre-built connectors for popular data sources, including SaaS applications, databases, and streaming services.
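Continuous ingestion of unstructured data for "chat with your data" use cases usually involves splitting documents into overlapping chunks before they are embedded. The sketch below shows one common technique, fixed-size word windows with overlap, in plain Python. It is an illustrative assumption, not necessarily how Openflow or Cortex chunk text, and the default sizes are arbitrary.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of chunk_size words that overlap by `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a window reaches the end, so no trailing chunk is a subset of the last one.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    doc = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_text(doc, chunk_size=4, overlap=1):
        print(chunk)
```

The overlap keeps sentences that straddle a boundary retrievable from either neighboring chunk, at the cost of storing some words twice.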

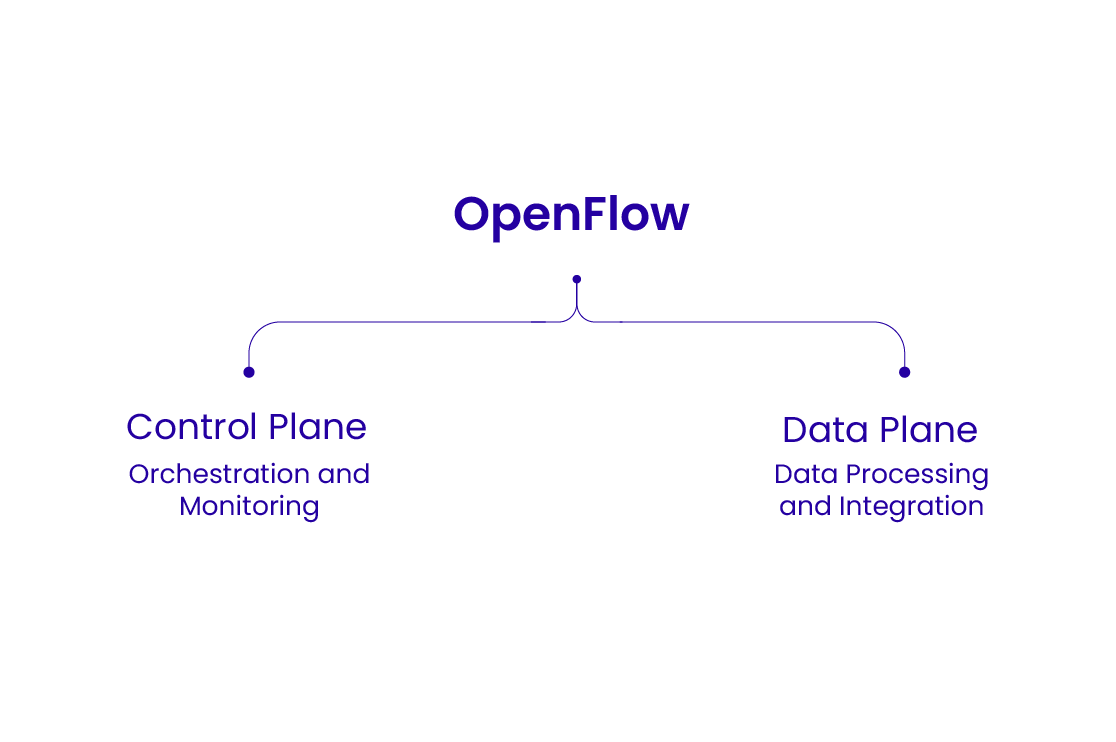

Architecture of Snowflake Openflow

Snowflake Openflow uses a two-part architecture made up of a control plane and a data plane. The control plane, managed within Snowflake’s environment, handles orchestration, management, and monitoring of data flows, while the data plane executes the actual data processing and integration tasks. The two components work as follows:

Control Plane: This component operates as a managed service within the Snowflake platform and is accessed through the Snowsight user interface. It is responsible for provisioning, managing, and monitoring data planes and for providing visibility into data flows. It also hosts the Connector Catalog, where users can explore available connectors, and includes observability features for monitoring data flow performance.

Data Plane: This is where the actual data processing and movement, that is, the execution of data pipelines, takes place. The data plane runs pipelines based on Apache NiFi and can be deployed either within the customer’s Virtual Private Cloud (VPC) or as a containerized service on Snowflake’s infrastructure using Snowpark Container Services.
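The division of labor between the two planes can be modeled in a few lines. The Python classes below are a hypothetical toy model, not Openflow's implementation or API: the control plane only provisions runtimes and reads their metrics, while each data plane runtime is the only component that handles records. The runtime names and location labels are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataPlaneRuntime:
    """Toy data plane runtime: the only component that handles records."""
    name: str
    location: str  # illustrative label, e.g. "customer-vpc" or "snowflake-spcs"
    processed: int = 0

    def execute(self, records: List[dict]) -> int:
        # A real runtime would run Apache NiFi flows; this one just counts records.
        self.processed += len(records)
        return len(records)


@dataclass
class ControlPlane:
    """Toy control plane: provisions runtimes and reports metrics, never stores records."""
    runtimes: Dict[str, DataPlaneRuntime] = field(default_factory=dict)

    def provision(self, name: str, location: str) -> DataPlaneRuntime:
        if name in self.runtimes:
            raise ValueError(f"runtime {name!r} already exists")
        runtime = DataPlaneRuntime(name, location)
        self.runtimes[name] = runtime
        return runtime

    def metrics(self) -> Dict[str, int]:
        # Observability: per-runtime record counts only.
        return {name: rt.processed for name, rt in self.runtimes.items()}


if __name__ == "__main__":
    cp = ControlPlane()
    byoc = cp.provision("ingest-byoc", "customer-vpc")
    managed = cp.provision("ingest-managed", "snowflake-spcs")
    byoc.execute([{"id": 1}, {"id": 2}])
    managed.execute([{"id": 3}])
    print(cp.metrics())
```

Keeping record handling out of the control plane is what allows the data plane to live in a customer VPC while management stays in Snowflake.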

Enterprise AI Challenges: What’s Holding Your Business Back?

Despite the explosive growth and promise of enterprise AI, a significant number of models never make it past the prototype stage—or worse, fail in production. Why? Because the foundation beneath these AI ambitions is often shaky. Enterprises grapple with a maze of challenges that stall scalability, erode trust, and inflate costs.

From poor data quality and fragmented systems to hallucinating LLMs and model degradation, the roadblocks are real and recurring. Understanding and addressing these pain points is not just a technical imperative but a strategic necessity for any organization that wants to unlock real value from AI.

Here’s a closer look at these enterprise AI bottlenecks:

-

Data quality & readiness: This refers to the need for accurate, complete, consistent, and well-structured data to train and operate AI models effectively. Poor data quality can lead to inaccurate predictions, biased outcomes, and, ultimately, failed AI initiatives. Many organizations struggle to prepare enterprise data for AI, a process that often involves significant manual effort.

-

Unstructured data: Documents, emails, images, videos, and other formats that don’t fit neatly into traditional database structures are known as unstructured data. Although this data contains valuable information, it lacks a predefined data model, which makes it difficult to process and analyze with traditional tools.

-

Model degradation: This refers to the gradual decline in an AI model’s performance as the data it encounters in production drifts away from the data it was trained on. Degradation leads to incorrect predictions, poor performance, and loss of trust in AI systems.

-

Scalability: This refers to the hurdles involved in scaling AI models and solutions across the enterprise to handle growing data volumes, user demand, and computational workloads. Failing to address scalability can lead to performance bottlenecks, higher costs, and, ultimately, failed AI deployments.

-

Data privacy & security: This involves protecting the sensitive data used by AI systems so that it is handled legally and ethically, and guarding against unauthorized access, misuse, and bias. Privacy breaches can result in legal penalties, financial losses, reputational damage, and loss of trust. Because AI systems often handle sensitive information, they raise the risk of data breaches and misuse.
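Model degradation is often detected by comparing the distribution of a feature in production with its training baseline. The sketch below computes the Population Stability Index (PSI), one widely used drift metric. It is a general technique rather than an Openflow feature, and the bin edges, the epsilon floor for empty bins, and the often-quoted rule of thumb that values above about 0.25 signal significant drift are assumptions to tune for your own data.

```python
import math
from bisect import bisect_right
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a production sample.

    `edges` are sorted bin boundaries; values fall into len(edges) + 1 bins.
    """
    def proportions(values: Sequence[float]) -> list[float]:
        if not values:
            raise ValueError("samples must not be empty")
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        total = len(values)
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / total, eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    # Each term (a - e) * ln(a / e) is non-negative, so PSI >= 0.
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


if __name__ == "__main__":
    baseline = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    production = [8, 9, 10, 11, 12, 13, 14, 15, 16, 17]
    edges = [3.5, 7.5]
    print(f"PSI = {psi(baseline, production, edges):.2f}")
```

Running a check like this on a schedule, per feature, gives an early warning to retrain before prediction quality visibly drops.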

While the challenges of unstructured data, hallucinations, and scalability can seem daunting, they are far from insurmountable. Forward-looking enterprises are already turning these roadblocks into stepping stones by embracing modern, responsible AI frameworks. The future of enterprise AI isn’t just about building smarter models; it’s about building a smarter foundation.

Snowflake Openflow’s Integration Power

Snowflake Openflow is reshaping how enterprises build, deploy, and manage AI/ML workflows. It is designed for simplicity, scalability, and speed, removing the barriers between data, models, and outcomes.

But what truly sets Openflow apart is its integration capability. Let’s look at how Openflow connects with the leading tools and platforms in the AI/ML ecosystem.

AI/ML Development & Model Management Tools

Openflow integrates natively and flexibly with top open-source tools used by data scientists and ML engineers:

Hugging Face: Take pre-trained transformer models and fine-tune them directly on enterprise data within Snowflake. Openflow enables model orchestration and inference at scale without moving data.

LangChain: Build LLM-powered applications that interact with Snowflake data in real time. Openflow supports pipelines that include logic for contextual retrieval and orchestration.

Ray: Openflow enables distributed training workloads to run efficiently by using Ray with data managed in Snowflake.

MLflow: Track experiments, manage model versions, and deploy ML models within the Snowflake ecosystem. Openflow bridges MLflow pipelines with Snowflake Cortex for scalable model inference.
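The contextual retrieval mentioned for LangChain-style pipelines comes down to ranking stored text chunks by similarity to a query and placing the best matches into the prompt. The sketch below shows that retrieval step with cosine similarity in plain Python. The three-dimensional vectors are toy stand-ins for real embeddings, which would come from an embedding model, and neither LangChain nor any Snowflake API is used.

```python
import math
from typing import List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], docs: List[Tuple[str, Sequence[float]]], k: int = 2) -> List[str]:
    """Return the ids of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda d: cosine(query, d[1]), reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]


def build_prompt(question: str, chunks: List[str]) -> str:
    """Place retrieved chunks ahead of the question (retrieval-augmented generation)."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    texts = {
        "refunds": "Refunds are issued within 5 business days.",
        "shipping": "Orders ship from the nearest warehouse.",
        "returns": "Items can be returned within 30 days.",
    }
    vectors = [("refunds", [1.0, 0.0, 0.0]), ("shipping", [0.0, 1.0, 0.0]), ("returns", [0.9, 0.1, 0.0])]
    ids = top_k([1.0, 0.0, 0.0], vectors, k=2)
    print(build_prompt("How long do refunds take?", [texts[i] for i in ids]))
```

At scale, the brute-force sort would typically give way to a vector index, but the ranking logic stays the same.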

Snowflake-Native Frameworks

Snowflake Openflow works hand-in-glove with Snowflake’s native AI and development environments:

Snowflake Cortex: Use Openflow to operationalize models via Cortex APIs. You can define and trigger workflows for classification, forecasting, or embedding generation directly within Cortex with minimal code.

Snowpark: Connect Snowpark DataFrames to Openflow pipelines for preprocessing, transformation, and feature engineering. Whether your code is written in Python, Java, or Scala, it can be maintained and stored within Snowflake.

Visualization & Application Frameworks

Turn insights into interactive apps with ease:

Streamlit: With Streamlit in Snowflake and Openflow, you can deploy low-latency data apps that invoke real-time ML predictions, combining ML workflows with user-facing dashboards to democratize AI.

Cloud-Based AI/ML Platforms

Openflow is designed to work well in multi-cloud AI environments, enabling hybrid workflows across cloud-native ML platforms:

Amazon Bedrock: Call foundation models on AWS via Openflow connectors, and perform prompt engineering or RAG (retrieval-augmented generation) on Snowflake data using Bedrock APIs.

Azure ML: Schedule and monitor Azure ML experiments with Openflow while reading from and writing to Snowflake tables, which suits enterprises running on the Microsoft ecosystem.

Databricks: Create collaborative ML workflows by linking Databricks notebooks and Lakehouse features with Snowflake’s governed data via Openflow, combining Databricks’ ML runtime with Snowflake’s secure data platform.

With Snowflake Openflow, organizations don’t have to choose between flexibility and control. They can build once and deploy everywhere, operate seamlessly across clouds and ecosystems, and keep their data secure and properly governed in Snowflake. Whether you’re scaling LLMs, training models on petabyte-scale data, or delivering insights via apps, Openflow connects it all.

How does Snowflake Openflow offer a competitive advantage to enterprises?


Snowflake Openflow isn’t just another orchestration tool; it’s a strategic advantage that helps enterprises move faster, innovate smarter, and scale with confidence. Here’s how it sets you apart:

In the race to scale AI and machine learning, Openflow streamlines the entire AI/ML lifecycle, from data ingestion to model deployment, within a single governed platform, which significantly shortens time to market for new initiatives. Enterprises no longer need to rely on fragmented tools or move data across environments, eliminating inefficiencies and reducing risk.

A key strength of Openflow lies in its integration with best-in-class tools across the AI/ML ecosystem. It connects with popular frameworks such as Hugging Face, LangChain, Ray, and MLflow, and with visualization tools like Streamlit. It also bridges to leading cloud-based platforms like Amazon Bedrock, Azure ML, and Databricks, giving teams the freedom to innovate with the tools they already trust, without vendor lock-in.

By running workflows closer to the data, organizations benefit from lower infrastructure and maintenance costs. Real-time integration with tools like Streamlit also means faster decision-making, turning AI outputs into interactive applications and immediate business impact.

In a fast-evolving AI landscape, Snowflake Openflow empowers enterprises to innovate faster, govern smarter, and execute AI strategies at scale, making it a true game changer for data-driven transformation.

How to Set Up Snowflake Openflow

Setting up Snowflake Openflow involves a series of structured steps designed to orchestrate and automate LLM-powered workflows directly within the Snowflake ecosystem.

The first step is to prepare your environment. Ensure that your Snowflake account has access to Cortex and Snowpark. You’ll also need to select a virtual warehouse with adequate compute capacity for AI workloads and assign the proper roles and permissions to users or services, including USAGE, CREATE, and EXECUTE rights on Openflow and related resources such as stages, tables, and UDFs.

Next, clearly define your workflow objective. Openflow is designed to help automate tasks such as summarizing support tickets, generating product descriptions, running sentiment analysis, or orchestrating full AI agent workflows. You’ll create the pipeline using the CREATE FLOW statement, and you can use built-in Cortex functions such as SNOWFLAKE.CORTEX.SUMMARIZE() or custom Python UDFs developed in Snowpark.

After defining the flow, you’ll test it using the EXECUTE FLOW command and monitor its behavior using Task History and Snowflake logs. Proper error handling, logging, and timeouts should be incorporated for production-level robustness. Once tested, flows can be scheduled using Snowflake Tasks or triggered dynamically via APIs or data events.
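For the error handling, logging, and timeouts mentioned above, one common pattern is to wrap each external call (for example, a model invocation) in retries with exponential backoff and an overall time budget. The Python helper below is a generic sketch under that assumption; it is not an Openflow or Snowflake Tasks feature, and the attempt count, delays, and budget are illustrative defaults.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("flow_runner")


def run_with_retries(step: Callable[[], T], max_attempts: int = 3, base_delay: float = 0.5,
                     budget_s: float = 30.0, sleep: Callable[[float], None] = time.sleep,
                     clock: Callable[[], float] = time.monotonic) -> T:
    """Call step(), retrying failures with exponential backoff within a time budget."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    start = clock()
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception as exc:
            delay = base_delay * 2 ** (attempt - 1)  # 0.5s, 1s, 2s, ... with the defaults
            out_of_time = clock() - start + delay > budget_s
            if attempt == max_attempts or out_of_time:
                logger.error("step failed after %d attempt(s): %s", attempt, exc)
                raise  # re-raise the original exception for the caller to handle
            logger.warning("attempt %d failed (%s); retrying in %.1fs", attempt, exc, delay)
            sleep(delay)
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

In a scheduled flow, `step` would wrap the call that invokes a model or external endpoint; keeping `sleep` and `clock` injectable makes the helper easy to test without real waiting.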

Several key factors must be considered during setup. From a security standpoint, Openflow can keep AI workflows within Snowflake, eliminating the need to move sensitive data across platforms. It’s critical to enforce role-based access control (RBAC) and auditing to maintain governance over who can run or modify flows. Performance tuning is also essential: monitor execution times and consider caching repetitive model outputs to save compute.
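Caching repetitive model outputs can be as simple as keying each result by a hash of the model name, prompt, and generation parameters. The in-memory Python class below is a hypothetical sketch of that idea; in a Snowflake deployment the same key could plausibly be stored in a table instead. Caching is only appropriate when reusing an earlier answer for identical input is acceptable, for example with deterministic generation settings.

```python
import hashlib
import json
from typing import Any, Callable, Dict


class ModelOutputCache:
    """In-memory cache of model outputs keyed by (model, prompt, params)."""

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, prompt: str, params: Dict[str, Any]) -> str:
        # sort_keys makes the key independent of parameter order.
        payload = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_compute(self, model: str, prompt: str, params: Dict[str, Any],
                       compute: Callable[[str], Any]) -> Any:
        k = self.key(model, prompt, params)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        self.misses += 1
        result = compute(prompt)  # the expensive model call happens only on a miss
        self._store[k] = result
        return result
```

Tracking hits and misses makes it easy to confirm whether caching is actually saving compute for a given workload.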

Finally, evaluate whether Openflow is the right tool for your specific use case. It is best suited for orchestrating generative AI tasks, automating data and AI pipelines, and simplifying collaboration between data and AI teams.

Conclusion: Snowflake Openflow – The True Unification of Data & AI

With Openflow, enterprises can build, govern, and scale AI workflows on the same platform they trust for data warehousing, analytics, and governance. Openflow unifies structured and unstructured data, Cortex models, Snowpark compute, and workflow automation in one cohesive environment.

CXO Takeaway

For CIOs, CDOs, and CTOs, Snowflake Openflow is the missing link between enterprise data and operational AI. It empowers your teams to move from experimentation to production securely, scalably, and cost-effectively. The result is a smarter enterprise where AI becomes a native capability rather than a bolt-on feature, driving measurable ROI across business units.

FAQs

What’s the difference between Openflow and Snowflake Cortex? Snowflake Cortex provides pre-built large language models (LLMs) and functions (such as summarization, translation, and Q&A) that can be used directly within SQL or Python. Snowflake Openflow, on the other hand, is an orchestration framework that lets you build and automate multi-step AI workflows, which may include Cortex functions, custom logic, and control flows such as loops and conditionals. Think of Cortex as the engine and Openflow as the automation layer that drives workflows end to end.

Can I deploy custom-trained models? Yes. Snowflake supports custom model deployment through Snowpark using Python, and via external functions that can call remote endpoints or model APIs. Openflow can invoke these UDFs or external models as part of a pipeline, allowing you to blend pre-trained models (via Cortex) with your proprietary or fine-tuned models.

What languages are supported? Openflow uses declarative SQL-based syntax to define pipelines and supports functions written in SQL, Python (via Snowpark), and JavaScript. You can also integrate models or logic hosted in other environments via HTTP external functions.

Does it support real-time use cases? Openflow is designed for near real-time and batch processing, not millisecond-level latency. However, by combining Openflow with Snowflake Tasks, event triggers, or APIs, you can enable event-driven or scheduled AI workflows that meet most real-time business needs, such as summarizing customer tickets as they come in or generating responses based on fresh data.
