Is your enterprise data sitting idle in Snowflake? Are your AI projects stuck in endless pilots with little impact? Are you finding it too costly and unpredictable to scale AI infrastructure outside of Snowflake, with ROI still uncertain?

Snowflake Cortex is a suite of fully managed AI and machine learning (ML) capabilities built into Snowflake. It lets users apply large language models (LLMs) and ML models directly to data stored in Snowflake for tasks like analyzing unstructured data, generating insights, and automating workflows.

Essentially, it empowers users to perform AI-driven analysis without needing extensive AI expertise or moving data outside of Snowflake.

A quick look at the key capabilities of Snowflake Cortex AI:

- LLM Functions
- ML Functions
- Cortex Search
- Cortex Analyst
- Cortex Agents
- AI Observability
- Hybrid Querying
- Multi-Step Reasoning

In essence, Snowflake Cortex AI makes AI and ML accessible to all users, because it doesn’t require specialized expertise in AI or large language models. Users can access AI capabilities within their existing Snowflake environment without moving data.
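To make "without moving data" concrete, here is a minimal Python sketch that builds a SQL query applying two Cortex LLM functions, `SNOWFLAKE.CORTEX.SENTIMENT` and `SNOWFLAKE.CORTEX.SUMMARIZE`, to a text column where it already lives. The table `support_db.public.tickets`, the column `ticket_text`, and the helper `cortex_enrichment_query` are hypothetical; check function availability for your account and region in the Snowflake documentation before running the generated SQL.

```python
import re

# Identifiers are interpolated into SQL text, so only allow plain (optionally
# dot-qualified) names. The names used below are hypothetical placeholders.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$.]*$")


def cortex_enrichment_query(table: str, text_column: str, limit: int = 100) -> str:
    """Build SQL that scores and summarizes text in place with Cortex LLM functions."""
    for name in (table, text_column):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    if limit <= 0:
        raise ValueError("limit must be positive")
    return (
        f"SELECT {text_column},\n"
        f"       SNOWFLAKE.CORTEX.SENTIMENT({text_column}) AS sentiment_score,\n"
        f"       SNOWFLAKE.CORTEX.SUMMARIZE({text_column}) AS summary\n"
        f"FROM {table}\n"
        f"LIMIT {limit}"
    )


print(cortex_enrichment_query("support_db.public.tickets", "ticket_text", limit=10))
```

The query runs entirely inside Snowflake, so the text never leaves the platform; the Python side only assembles the statement you would pass to a Snowpark session or worksheet.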

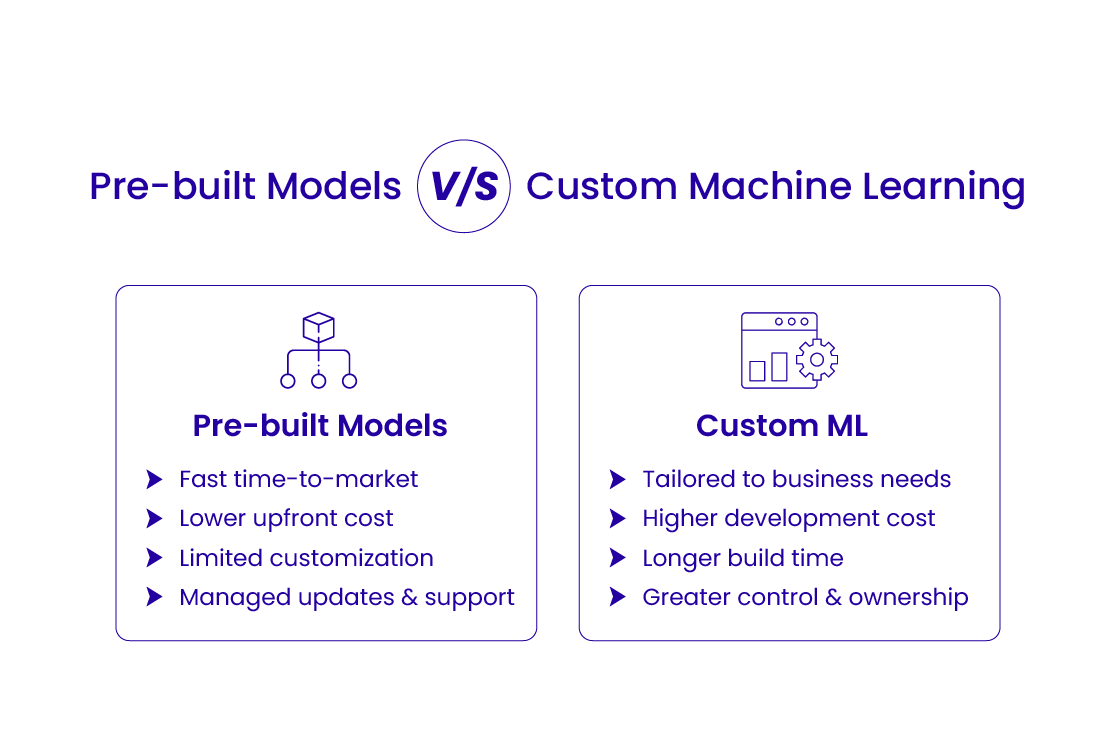

Pre-built Models vs. Custom Machine Learning

The decision to use pre-built models (including SQL ML Functions and Cortex Agents) versus custom-trained models in Snowflake ML depends on the specific use case, required flexibility, and available resources.

When to use SQL ML Functions and Snowflake Cortex AI Agents (Pre-built Models)

Use SQL ML Functions for common ML tasks such as time-series forecasting, anomaly detection, or classification, where a quick, out-of-the-box solution is sufficient. Their straightforward, SQL-based interface lets analysts apply machine learning without building models from scratch.
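As an illustration, the helper below generates the two statements that, as we understand the ML Functions interface, train a forecasting model and request predictions: a `CREATE SNOWFLAKE.ML.FORECAST` statement and a `CALL <model>!FORECAST(...)` statement. The model, table, and column names are hypothetical, and the generated SQL should be checked against the current Snowflake ML Functions documentation before use.

```python
from __future__ import annotations

import re

# Names are placeholders; validate them because they are interpolated into SQL.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$.]*$")


def forecast_statements(
    model: str, table: str, timestamp_col: str, target_col: str, periods: int
) -> list[str]:
    """Return SQL to train a Snowflake ML forecast model and then call it."""
    for name in (model, table, timestamp_col, target_col):
        if not _IDENT.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    if periods < 1:
        raise ValueError("periods must be at least 1")
    create = (
        f"CREATE OR REPLACE SNOWFLAKE.ML.FORECAST {model}(\n"
        f"  INPUT_DATA => SYSTEM$REFERENCE('TABLE', '{table}'),\n"
        f"  TIMESTAMP_COLNAME => '{timestamp_col}',\n"
        f"  TARGET_COLNAME => '{target_col}'\n"
        f")"
    )
    call = f"CALL {model}!FORECAST(FORECASTING_PERIODS => {periods})"
    return [create, call]


for statement in forecast_statements("sales_forecast", "daily_sales", "sale_date", "revenue", 14):
    print(statement + ";")
```

Training and inference are both plain SQL here, which is what makes these functions approachable for analysts who don’t work in Python.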

Snowflake Cortex AI Agents are ideal for building conversational AI applications, automating tasks, or enabling intelligent interactions with data through natural language, especially when leveraging large language models (LLMs) like Anthropic’s Claude 3.5 Sonnet.

When to use Custom-Trained Models

Use custom-trained models when the problem requires highly specialized models tailored to unique business rules, data structures, or performance requirements that pre-built functions cannot adequately address; when you need complex ML algorithms, deep learning models, or custom architectures that are not available as pre-built functions; or when precise control over model training, hyperparameter tuning, and performance optimization is crucial.

In short, start with pre-built options if they meet your needs for simplicity, speed, and standard use cases. Move to custom-trained models when you face complex, specialized problems that require advanced techniques, fine-grained control, or integration with specific open-source ML frameworks.
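The rule of thumb above can be written down as a tiny decision helper. This is an illustrative sketch, not an official Snowflake decision framework: the `UseCase` fields and the set of tasks treated as covered by pre-built functions are our own assumptions drawn from the examples in this section.

```python
from dataclasses import dataclass

# Tasks this article names as good fits for pre-built functions (assumption:
# adjust to what your Snowflake edition and region actually offer).
PREBUILT_TASKS = {"forecasting", "anomaly_detection", "classification", "conversational_ai"}


@dataclass
class UseCase:
    task: str
    needs_custom_architecture: bool = False   # deep learning, bespoke algorithms
    needs_fine_grained_control: bool = False  # training, tuning, optimization
    needs_open_source_framework: bool = False  # a specific OSS ML library


def recommend_approach(use_case: UseCase) -> str:
    """Start with pre-built options; escalate to custom ML only when required."""
    if (
        use_case.needs_custom_architecture
        or use_case.needs_fine_grained_control
        or use_case.needs_open_source_framework
    ):
        return "custom"
    if use_case.task in PREBUILT_TASKS:
        return "pre-built"
    # Anything outside the standard task list needs a custom model.
    return "custom"


print(recommend_approach(UseCase("forecasting")))
print(recommend_approach(UseCase("forecasting", needs_fine_grained_control=True)))
```

Any one "custom" requirement overrides the task match, which mirrors the advice to default to pre-built functions and escalate only when they fall short.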

Pro Tip: Pre-built models offer speed and cost-efficiency, whereas custom ML enables deeper differentiation and competitive advantage. The right Snowflake consulting and implementation approach helps enterprises balance time-to-market with long-term innovation, ensuring AI aligns with strategic business priorities.

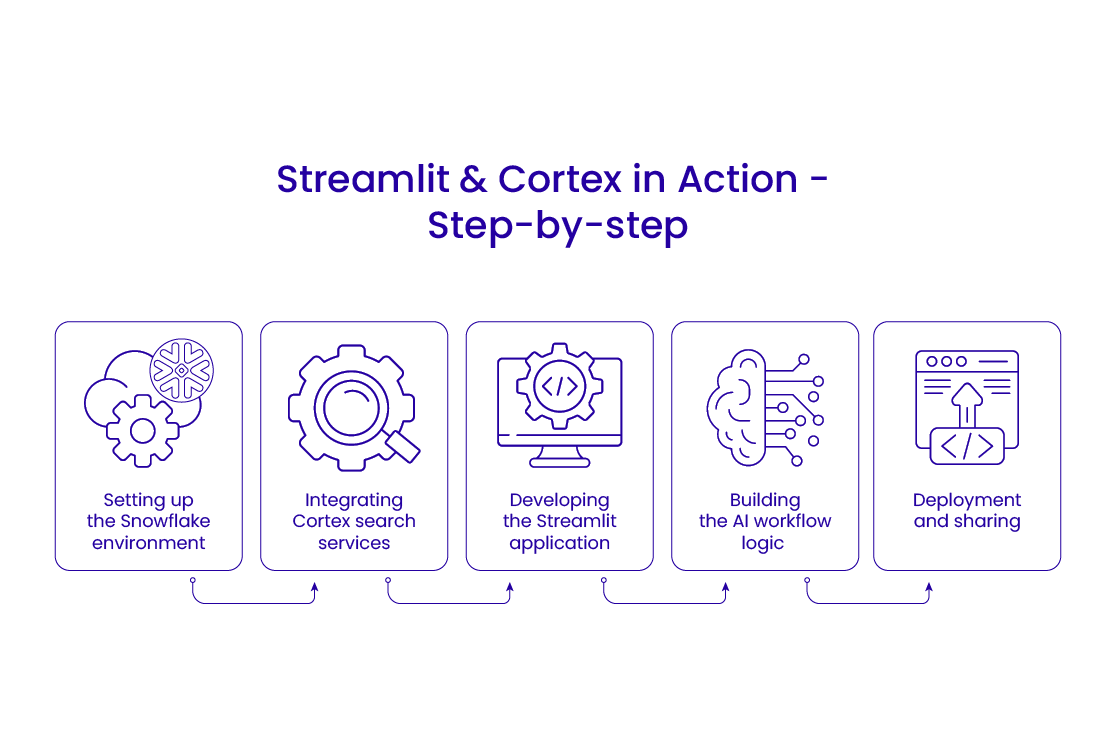

Building AI Workflows: Streamlit and Cortex in Action – Step-by-step

Here’s a breakdown of the steps involved in building an AI-powered application workflow, using Streamlit for the frontend and Snowflake Cortex for backend capabilities such as search and large language model (LLM) calls:

- Setting up the Snowflake environment
- Integrating Cortex Search services
- Developing the Streamlit application
- Building the AI workflow logic
- Deployment and sharing
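The "AI workflow logic" step typically follows a retrieve-then-generate pattern: fetch relevant passages, build a grounded prompt, and send it to an LLM. The sketch below keeps that logic independent of Snowflake by taking the search and completion steps as plain functions. In a real app, `search` might wrap a query against a Cortex Search service and `complete` might wrap `SNOWFLAKE.CORTEX.COMPLETE`; both wrappers, and every name here, are hypothetical.

```python
from typing import Callable, List

SearchFn = Callable[[str, int], List[str]]  # (question, top_k) -> passages
CompleteFn = Callable[[str], str]           # prompt -> model answer


def build_prompt(question: str, passages: List[str]) -> str:
    """Number the retrieved passages and ask the model to stay within them."""
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def answer_question(question: str, search: SearchFn, complete: CompleteFn, top_k: int = 3) -> str:
    """Retrieve passages, then generate an answer grounded in them."""
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    passages = search(question, top_k)
    if not passages:
        return "No relevant documents found."  # skip the LLM call entirely
    return complete(build_prompt(question, passages))


# Stand-ins so the logic can be exercised without a Snowflake connection.
def fake_search(question: str, top_k: int) -> List[str]:
    docs = ["Refunds are processed within 5 business days.", "Support is available 24/7."]
    return docs[:top_k]


def echo_complete(prompt: str) -> str:
    return prompt  # a real implementation would call an LLM here


print(answer_question("How long do refunds take?", fake_search, echo_complete, top_k=1))
```

In a Streamlit page, you could read the question with `st.text_input` and display the result with `st.write`. Keeping the workflow in plain functions like these makes it testable without a running app.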

Note: The exact code and implementation details will depend on the specific AI task, data, and desired user experience. Snowflake provides tutorials and documentation to guide you through building various types of AI applications, including chatbots and semantic search tools, using Streamlit and Snowflake Cortex.

Performance Tuning & Security Best Practices

Performance tuning

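- Write efficient SQL queries: minimize complexity, use appropriate operators, and avoid full table scans. In Snowflake, filtering on well-clustered columns lets the engine prune micro-partitions instead of scanning the whole table.
- On platforms that support them, use indexes on frequently queried or joined columns to speed up data retrieval. Standard Snowflake tables don’t use traditional indexes, so clustering keys and the search optimization service play that role.
- Use suitable data types, normalize data to reduce redundancy, and consider partitioning or sharding large tables to distribute data and improve query performance. (Snowflake partitions table data into micro-partitions automatically.)
- Optimize database parameters like buffer sizes and allocate sufficient CPU, memory, and disk resources; in Snowflake, this mainly means right-sizing virtual warehouses. Implement connection pooling to minimize connection overhead.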
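Connection pooling is easy to get subtly wrong, so here is a minimal, thread-safe sketch built on Python’s standard `queue` module. The `factory` argument is whatever opens a connection, for example a call to `snowflake.connector.connect(...)` from the Snowflake Python connector; the `ConnectionPool` class itself is illustrative, not part of any Snowflake library.

```python
import queue
from contextlib import contextmanager
from typing import Callable, Generic, Iterator, TypeVar

T = TypeVar("T")


class ConnectionPool(Generic[T]):
    """Open a fixed number of connections once and hand them out for reuse."""

    def __init__(self, factory: Callable[[], T], size: int = 4) -> None:
        if size < 1:
            raise ValueError("size must be at least 1")
        self._idle: "queue.Queue[T]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())  # pay the connection cost up front

    @contextmanager
    def connection(self, timeout: float = 10.0) -> Iterator[T]:
        # Blocks until a connection is free; raises queue.Empty after `timeout`.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always return it, even if the caller raised


opened = []


def open_fake_connection() -> object:
    opened.append(1)  # count how many "connections" were actually opened
    return object()


pool = ConnectionPool(open_fake_connection, size=2)
for _ in range(10):
    with pool.connection() as conn:
        pass  # run a query with `conn` here
print(len(opened))  # 2: ten requests reused two connections
```

The bounded queue doubles as a concurrency limit: if every connection is busy, callers wait instead of opening new sessions.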

Cost control

- Choose appropriate hardware or cloud tiers and use features such as autoscaling and load balancing to avoid over-provisioning, optimize resource allocation, and reduce infrastructure costs. In Snowflake, this includes right-sizing warehouses and enabling auto-suspend so idle compute stops consuming credits.
- Use cloud cost management tools to analyze usage patterns, compare pricing models, and identify areas for cost reduction.
- Move old or less frequently accessed data to cheaper storage tiers, or use data compression features, to significantly reduce storage expenses.
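In Snowflake, two common cost guardrails are resource monitors, which cap credit usage, and warehouse auto-suspend, which stops billing for idle compute. The helper below generates that SQL. The monitor and warehouse names, the 100-credit quota, and the 60-second suspend window are placeholder values, not recommendations, and the statements need a role with the appropriate privileges (creating resource monitors typically requires ACCOUNTADMIN).

```python
import re
from typing import List

# Plain, unqualified identifiers only; they are interpolated into SQL text.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def cost_guardrail_sql(
    monitor: str,
    warehouse: str,
    monthly_credit_quota: int,
    notify_at_percent: int = 80,
    auto_suspend_seconds: int = 60,
) -> List[str]:
    """Cap monthly credits for one warehouse and suspend it when idle."""
    for name in (monitor, warehouse):
        if not _IDENT.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    if monthly_credit_quota <= 0 or not 0 < notify_at_percent < 100:
        raise ValueError("quota must be positive and the notify threshold 1-99")
    if auto_suspend_seconds < 0:
        raise ValueError("auto_suspend_seconds cannot be negative")
    return [
        f"CREATE OR REPLACE RESOURCE MONITOR {monitor}\n"
        f"  WITH CREDIT_QUOTA = {monthly_credit_quota}\n"
        f"  FREQUENCY = MONTHLY\n"
        f"  START_TIMESTAMP = IMMEDIATELY\n"
        f"  TRIGGERS ON {notify_at_percent} PERCENT DO NOTIFY\n"
        f"           ON 100 PERCENT DO SUSPEND",
        f"ALTER WAREHOUSE {warehouse} SET RESOURCE_MONITOR = {monitor}",
        f"ALTER WAREHOUSE {warehouse} SET AUTO_SUSPEND = {auto_suspend_seconds} AUTO_RESUME = TRUE",
    ]


for statement in cost_guardrail_sql("analytics_monitor", "analytics_wh", 100):
    print(statement + ";")
```

`DO SUSPEND` lets running queries finish before the warehouse stops; if you need a hard stop, the resource monitor syntax also offers `DO SUSPEND_IMMEDIATE`.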

Security best practices

- Implement the principle of least privilege, granting users only the minimum access necessary for their tasks.
- Enforce strong authentication mechanisms, including strong password policies, multi-factor authentication (MFA), and secure password hashing.
- Encrypt sensitive data both at rest (stored on devices) and in transit (during network communication).
- Conduct regular database security audits to identify vulnerabilities, assess compliance, and verify the effectiveness of security controls.
- Implement network security measures like firewalls to restrict unauthorized access to the database server.
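Least privilege in Snowflake is expressed through role-based access control: create a role, grant it only what a task needs, and assign that role to users. The sketch below generates grants for a read-only analyst role that can also call Cortex functions via the `SNOWFLAKE.CORTEX_USER` database role. The role, warehouse, database, schema, and table names are hypothetical; review the generated statements before running them.

```python
import re
from typing import List

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def least_privilege_grants(
    role: str,
    warehouse: str,
    database: str,
    schema: str,
    tables: List[str],
    allow_cortex: bool = True,
) -> List[str]:
    """Grant one role read-only access to specific tables, plus optional Cortex use."""
    for name in (role, warehouse, database, schema, *tables):
        if not _IDENT.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    statements = [
        f"CREATE ROLE IF NOT EXISTS {role}",
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role}",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role}",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO ROLE {role}",
    ]
    # SELECT on named tables only, rather than every table in the schema.
    statements += [
        f"GRANT SELECT ON TABLE {database}.{schema}.{table} TO ROLE {role}" for table in tables
    ]
    if allow_cortex:
        statements.append(f"GRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE {role}")
    return statements


for statement in least_privilege_grants(
    "support_analyst", "analytics_wh", "support_db", "public", ["tickets"]
):
    print(statement + ";")
```

If we read Snowflake’s documentation correctly, `SNOWFLAKE.CORTEX_USER` is granted to `PUBLIC` by default, so teams enforcing least privilege often revoke it from `PUBLIC` and grant it only to roles like the one above.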

By combining these performance tuning, cost control, and security practices with robust data governance and advanced analytics, organizations can optimize their Snowflake environment, including Cortex AI workloads, for efficiency, security, and scalability, and maximize the return on their data assets.

General Machine Learning (ML) Deployment Example

This practical demo walks through an end-to-end deployment: setting up the deployment environment, working with semantic models, orchestrating the deployment process, and finally deploying the solution.

Scenario: Deploying a machine learning model as a service

Let’s imagine we’re deploying a machine learning model that predicts customer churn.

Setup

Choose a cloud platform. In this example, we use Amazon Web Services (AWS) and its machine learning service, Amazon SageMaker, along with Microsoft Azure for some components. To set up a development environment, install the required packages, such as fastapi, uvicorn, transformers, torch, and python-multipart.

Semantic models

Define your model and train it using your preferred framework. Track experiments and model versions with tools like MLflow, and assess the model’s performance with relevant metrics such as accuracy, precision, and recall.
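To make the evaluation step concrete, here is how accuracy, precision, and recall are computed for a binary churn classifier (1 = churned). The labels are made-up toy values; in practice you would likely use a library implementation such as scikit-learn’s metrics module and log the results to your experiment tracker.

```python
from typing import Dict, Sequence


def churn_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall for binary labels where 1 means churn."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # churners we caught
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # churners we missed
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


print(churn_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
```

Recall matters most when missing a churner is costly; precision matters more when each retention offer is expensive, so it is worth tracking both rather than accuracy alone.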

Orchestration

Use Docker to package the model and its dependencies into a container image. This ensures consistency across different environments. Set up the Continuous Integration and Continuous Deployment (CI/CD) pipelines to automate the building, testing, and deployment of your model.
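One piece of a CI/CD pipeline that is easy to overlook is a quality gate: an automated check that blocks deployment when a newly trained model underperforms. The function below is a minimal sketch; the metric names, thresholds, and the 0.01 regression tolerance are illustrative assumptions.

```python
from typing import Dict, Optional


def passes_quality_gate(
    candidate: Dict[str, float],
    minimums: Dict[str, float],
    production: Optional[Dict[str, float]] = None,
    max_regression: float = 0.01,
) -> bool:
    """Return True only if the candidate meets every floor and doesn't regress."""
    for metric, floor in minimums.items():
        value = candidate.get(metric)
        if value is None or value < floor:
            return False  # missing or below the absolute minimum
        if production is not None and metric in production:
            if value < production[metric] - max_regression:
                return False  # noticeably worse than the model already serving
    return True


print(passes_quality_gate({"recall": 0.70, "precision": 0.60}, {"recall": 0.65}, {"recall": 0.72}))
```

Here the candidate clears the 0.65 floor but trails the production model by more than the tolerance, so the gate prints `False`. In a CI job, you could exit with a non-zero status when the gate fails so the pipeline stops before the container image is pushed.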

Deployment

Choose a deployment strategy that fits the use case. Deploy the model as an online endpoint for real-time inference or, if real-time predictions aren’t necessary, run batch inference on a schedule. Either way, continuously track the model’s performance in the production environment.
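For the batch option, scheduled scoring usually reduces to reading customer rows, scoring them in fixed-size batches, and writing the results back. The sketch below shows that loop with a toy stand-in for the model; `toy_model`, the `support_tickets` feature, and the 0.5 threshold are hypothetical, and reading from and writing to your warehouse is left out.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List, Sequence

Row = Dict[str, Any]


def batched(rows: Iterable[Row], size: int) -> Iterator[List[Row]]:
    """Yield lists of at most `size` rows so memory use stays bounded."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: List[Row] = []
    for row in rows:
        batch.append(row)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch


def score_in_batches(
    rows: Iterable[Row],
    predict_proba: Callable[[List[Row]], Sequence[float]],
    batch_size: int = 1000,
    threshold: float = 0.5,
) -> List[Row]:
    """Score rows batch by batch and flag customers at or above the threshold."""
    results: List[Row] = []
    for batch in batched(rows, batch_size):
        probabilities = predict_proba(batch)
        if len(probabilities) != len(batch):
            raise ValueError("model returned the wrong number of scores")
        for row, p in zip(batch, probabilities):
            results.append(
                {"customer_id": row["customer_id"], "churn_probability": p, "at_risk": p >= threshold}
            )
    return results


def toy_model(batch: List[Row]) -> List[float]:
    # Hypothetical stand-in: more support tickets means higher churn risk.
    return [min(1.0, row["support_tickets"] / 10) for row in batch]


customers = [
    {"customer_id": "a", "support_tickets": 8},
    {"customer_id": "b", "support_tickets": 1},
    {"customer_id": "c", "support_tickets": 5},
]
for result in score_in_batches(customers, toy_model, batch_size=2):
    print(result)
```

Because `batched` consumes any iterable, the same loop works whether rows come from a list, a file, or a paginated query cursor.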

Deploying ML models with Snowflake Cortex AI shows how enterprises can move from experimentation to production at scale, turning predictive analytics into actionable business outcomes like reduced churn, higher retention, and new revenue streams.

Pro Tip: The specific tools and processes will vary depending on your chosen cloud provider, your requirements, and the complexity of your model and application.

Business ROI – How Snowflake Cortex AI Generates Returns

Technology alone doesn’t justify investment—measurable business outcomes do. Snowflake Cortex AI isn’t just about embedding AI into your data stack; it’s about cutting costs, accelerating time-to-market, and unlocking competitive advantage. Here’s how it translates directly into ROI for your enterprise.

Cost Savings Through Consolidation

- No need for additional infrastructure or for moving data outside Snowflake.
- Fewer hidden costs from data duplication, ETL complexity, and third-party AI hosting.
- Less dependency on specialized AI teams.

Faster Time-to-Market

- Faster deployment of use cases like churn prediction, forecasting, and anomaly detection.
- Rapid prototyping and rollout of AI-powered apps directly to business teams.
- Governance and observability features mean less time spent on compliance approvals.

Competitive Advantage at Scale

- Natural language querying makes data accessible to every department.
- Hybrid querying and multi-step reasoning enable advanced insights that help organizations stay ahead of competitors.
- Custom ML integration supports proprietary models tailored to your market strategy.

Conclusion

Snowflake Cortex reinvents enterprise AI by embedding generative intelligence directly into your data stack. From AISQL to Cortex Agents and Analyst, Cortex combines governance, flexibility, and scale, all without leaving Snowflake.

Whether you’re accelerating insight generation with pre-built models or delivering bespoke ML through Streamlit-powered workflows, Snowflake Cortex AI, together with the right Snowflake consulting and implementation partner such as BluEnt, empowers your organization to modernize its AI capabilities with confidence and control.

FAQs

What is Snowflake Cortex used for?
It’s a suite of AI features (AISQL, Agents, Analyst, Search) that enables LLM-driven apps within Snowflake.

Should I use pre-built Cortex AI functions or custom ML?
Use AISQL and agents for quick AI insights; opt for Snowflake ML for tailored, custom-model solutions.

Can I build Streamlit apps inside Snowflake using Cortex?
Yes. Snowflake supports Streamlit in Snowflake, which you can build and run from Snowsight, and you can integrate Cortex Analyst, Agents, and Knowledge Extensions into those apps.

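How do I maintain security and governance when using Cortex?
Cortex runs inside Snowflake, so you can apply Snowflake’s role-based access control, data privacy, and governance features to your AI workloads, alongside the performance tuning and security practices described above.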

Is there a complete workflow example for deployment?
Yes. Snowflake’s “Complete Guide” and the Cortex Agents quickstart provide full end-to-end references.
